Strolling through library shelves on graphs' edges

Contents

During development of the new African Studies Library online portal, I was faced with the task to implement an authority based search system. Without a more detailed specification, I first had to figure out, what that could be and how to implement it.

The authority data to be used were datasets provided by the German “Gemeinsame Normdatei”, GND for short. That is all fine and well, but nobody would enter GND-Identifiers like https://d-nb.info/gnd/121578437 in a search field, expecting any kind of meaningful result. That’s simply not practical. So let’s do something else. How about a search system delivering search results based on authority data as suggestions or recommendations? And no strange identifiers would need to be entered by users.

In this article, I want to outline some ideas and challenges faced during development. In doing so, I will differentiate between records, i.e. (bibliographical) data points in our database, and subjects. Subjects are subject headings associated with records and are authority data points. A record with such authority data, I will call annotated.

Strolling through shelves, finding random interesting books

Imagine visiting a library, looking for a book. You have a bit of time on your hands, so you stroll along the shelves and look at other books, in search of nothing in particular. The shelves are usually arranged somewhat based on what topics they contain, thus forming topical clusters. The longer your stroll, the further it takes you away from what you were looking for in the beginning, but not too far from your original topic of interest. Using a graph, a stroll like this could be replicated in software. If subject headings were unambiguous, i.e. GND-Identifiers, it could offer engaging and surprising suggestions to users, all related to the topic they’re looking for.

Using authority data to create a knowledge graph

I Suppose the subjects of annotated records can safely be used as a model for “shelves”. With that, a knowledge graph could be constructed that recreates topical clusters similar to what you might find in a library. Of the around 120,000 subjects used as subjects in my initial dataset, many included “similar terms”, “variant names” or “broader subjects” in their data. These relations could form a set of paths for the user to stroll on.

All that was left to do now, was choosing the right tools for implementation and cobbling together a quick proof of concept. Can’t be too hard, right? Since I was already somewhat familiar with ArangoDB, I chose it over Neo4J. An additional benefit of choosing ArangoDB is its multimodal model, allowing document storage alongside a graph or key-value storage. Thus, the graph traversal was simply something to be added “on top” of the original JSON data storage.

Trials and Tribulations – Mistakes Were Made

The “cobbling together a quick proof of concept”-part was not all that quick, I’m afraid…

Being a “quick proof of concept”, I put records and subjects into the same collection (i.e. “table” in the database). All edges connecting these data points were stored in a single collection as well. Similarities between subjects were not modelled at first. Traversions on this model were usually done in the form of:

Record --> Subject --> Record --> Subject --> Record --> ...

Suggestions were all Records on such a path.

This first proof of concept worked, but it had two major flaws. Firstly, it produced suggestions that could be way out of topic, almost as if any book in the whole library were chosen at random. And secondly it proved to be very slow, with queries taking seconds to complete if not timing out outright. With hindsight, this is easy to explain. The resulting graph had nodes with thousands of edges, since a single subject could be attached to more than 20,000 records. Subjects like “Africa” were especially bad in a dataset mostly concerned with records on Africa. This resulted in millions of possible paths, most only differentiated by a single item at the end of the path. Because ArangoDB uses binary search algorithms as “traversal methods”, each of these possible paths needed to be exhausted, i.e. each leaf in the search tree needed to be visited, before a new path was chosen. And while binary searches do scale well, they don’t scale that well.

A Topic Modelling approach

Unfortunately, the first proof of concept was somewhat of a mixed bag, especially performance-wise. So instead of having all edges in a single collection, I split the relations between nodes into three smaller collections. Only one of which needed to be traversed:

- IsSubjectOf – Denominating a relation from subject to record

- HasSubject – The reverse of the above

- IsSimilarTo – An undirectional relation between two subjects, based on their authority data. These are the edges to traverse, each representing a connection between the “shelves”.

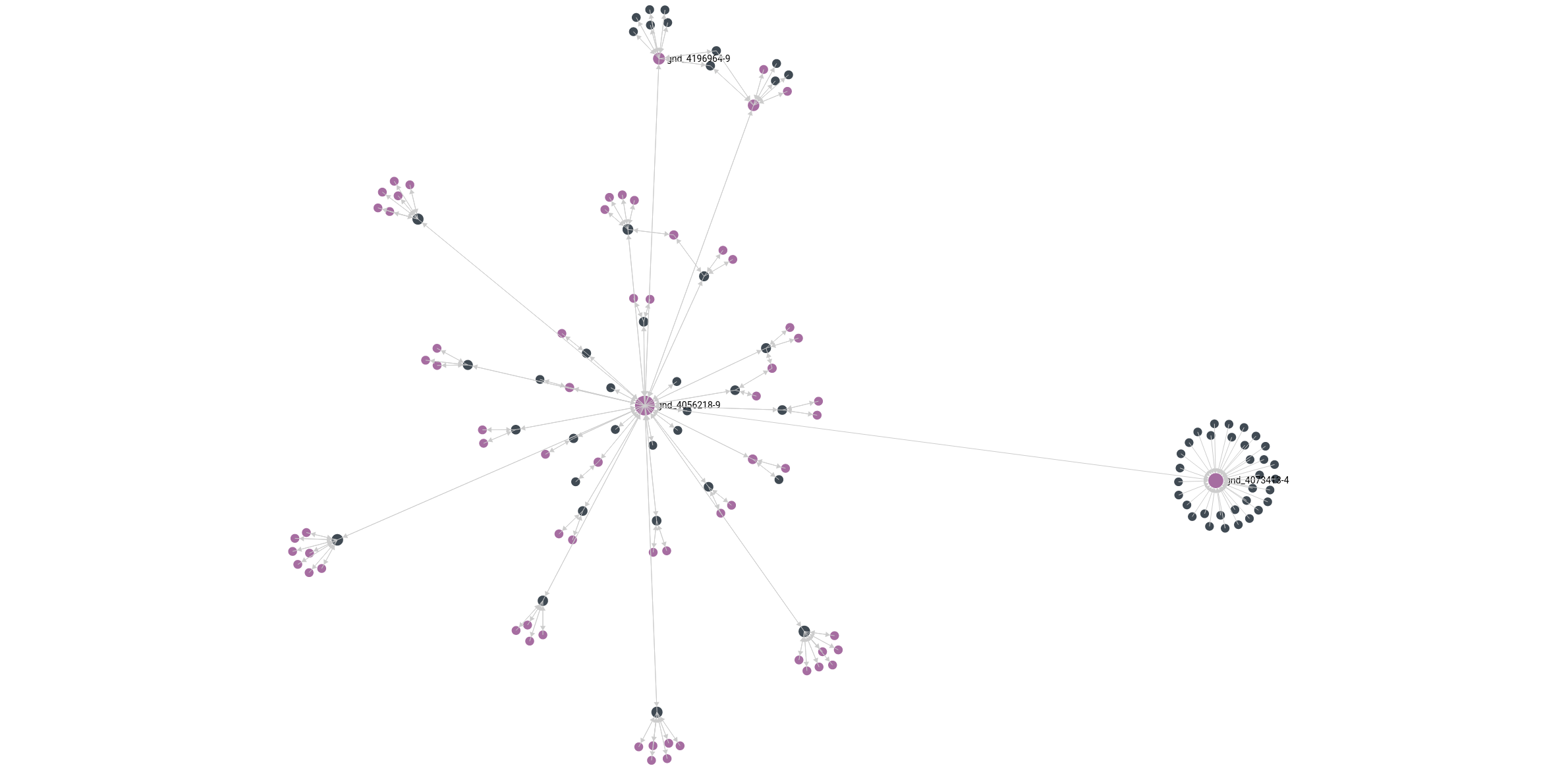

While IsSimilarTo is a gross oversimplification of the relationship between subjects, it was not meant to be precise. It represents any similarity between them, to build as many credible bridges between clusters as possible. This was necessary, because subjects seldom had more than 4 relations, most having none or one. The image below shows a section of our knowledge graph, illustrating the relationship between two topical clusters.

(Fig. 1: Section from our knowledge Graph. Black dots represent records, violet ones represent subjects.)

(Fig. 1: Section from our knowledge Graph. Black dots represent records, violet ones represent subjects.)

Instead of traversing from record to record, as I did in the proof of concept, I changed the traversion to something more alike the stroll described earlier: travelling on connections between subjects. Since a Subject had at most four relations, this resulted in a small number of possible paths to stroll. Of these, some were shorter, some longer, but restricted by a maximum length and all starting at a random subject of the record currently on display. Since each path was equally valid, I deemed it bad to have them ranked, but had one chosen at random. The graph was then traversed along this randomly chosen path of subjects and on each stop a record was chosen via a random IsSubjectOf edge. This was repeated until at least six suggestions were taken (without duplicates), to be displayed on the records page in our portal.

As expected, performance was much better, with query times seldom exceeding 60ms.

The whys and wherefores

While this approach now delivers the desired results, some questions might need addressing. I will take some time to answer two that came to mind.

Why not rank the paths?

The better question would be why rank paths of equal validity. Were I to rank the paths I’d need a ranking system first, an adjacency matrix perhaps. Such matrices were for instance employed in Google’s Page Rank, creating weights for the edges in a web-graph. Assuming each record had a rank, it would then hand down a potion of its rank to its subjects via HasSubject. These ranks would then form the adjacency matrix of a record. The same would need to be repeated for IsSimilarTo. Even if all records had the same rank, sorting the paths by their scores (for instances the combined weights of their stops) would result in some paths be shown more often than others, favouring subjects with higher ranks, i.e. a greater number of records attached to them. This creates a filter bubble, where discourses and clusters with a plethora of records get suggested more frequently, further reducing the visibility of publications that already are quite invisible in the first place. Adjusting ranks for “invisible” records is certainly possible, but it would open up more questions and problems.

Why have a static system that does not recommend based on a user’s interests?

Taking user data into account would result in a system more tailored to a specific user’s interests. While this can be a good thing, the filter bubble created is not, especially in a scientific setting. Furthermore, evaluating a user’s click path prerequisites explicit permission by the user to do so. So constantly tracking a user’s interactions with our portal, simply to create suggestions slightly more tailored to a user’s interests, seems inappropriate.

Further development

The system could possibly benefit if more GND-datapoints where added, without records attached to them, to create additional paths between clusters that are currently “unrelated”. While creating more paths, it’s difficult to avoid muddying the waters, since clusters that are related by several jumps are not really topically related at all.

Another possible use for the system is the possibility of some sort of “I’m feeling lucky” approach, where a user can simply press a button and be presented with a totally random path and all records along the path pulled from the database. This might help a scholar suffering from a lack of inspiration to find a new area of research for themselves, or simply have fun with the records we offer. At any rate, the system described can be seen only as a stepping stone for further improvement in the years to come.

Written by Yannic Jäckel. Last Modified on 2021-09-15.